TAOBench: Running social media workloads on PlanetScale

As PlanetScale expands its customer base, our Tech Solutions team often collaborates with companies that are evaluating whether PlanetScale fits the requirements of their existing development stack. Understanding how database performance relates to an application’s overall performance profile can be complicated, so standardized benchmark workloads are a helpful reference for building a clearer picture of what to expect.

Last week, we published a first taste of the work we’ve been doing in this area: a short introduction to how PlanetScale and Vitess can be used to linearly scale a workload like TPC-C to one million queries per second. Focusing on the number alone misses the point: in theory, the sky is the limit, and many Vitess users run workloads that are many times that size. The real value of the exercise lies in showing how workloads like TPC-C can scale linearly and predictably without compromising on relational and transactional requirements.

The TPC-C benchmark has had a very long life and has remained remarkably relevant to this day, but there are scenarios it doesn’t cover. Audrey Cheng and her team at the University of California, Berkeley identified a real gap in the available synthetic benchmarks for a more recent but highly pervasive workload type: social media networks. In collaboration with engineers at Meta, she has published two white papers. The first analyzes the challenges posed by Meta’s overall workload composition. The second, presented this week, proposes an open source benchmark called TAOBench, which applies these principles and is compatible with many modern databases.

Since Vitess powers some of the largest relational workloads on the internet today, their efforts gave us the perfect opportunity to collaborate on a deeper dive into this particular workload type. While Cheng and her team present their second white paper at the Very Large Data Bases (VLDB) conference this week, we at PlanetScale have added TAOBench to our arsenal of standardized benchmarks as well, and will be publishing more in-depth articles about our results over the coming weeks.

For now, let’s summarize the benchmark itself and PlanetScale’s published results.

The workload and dataset composition

The TAOBench team spent significant time analyzing a representative slice of Meta’s workload and summarized its key characteristics into two main scenarios:

- Workload A (short for Application) focuses specifically on a transactional subset of the queries

- Workload O (short for Overall) encompasses a more generalized profile of the TAO workload

In practice, the behavior of these workloads may come across as somewhat synthetic and reductionist, but reading the white papers clarifies how the two workloads end up closely resembling the real-world behavior observed at Meta.

Objects and edges

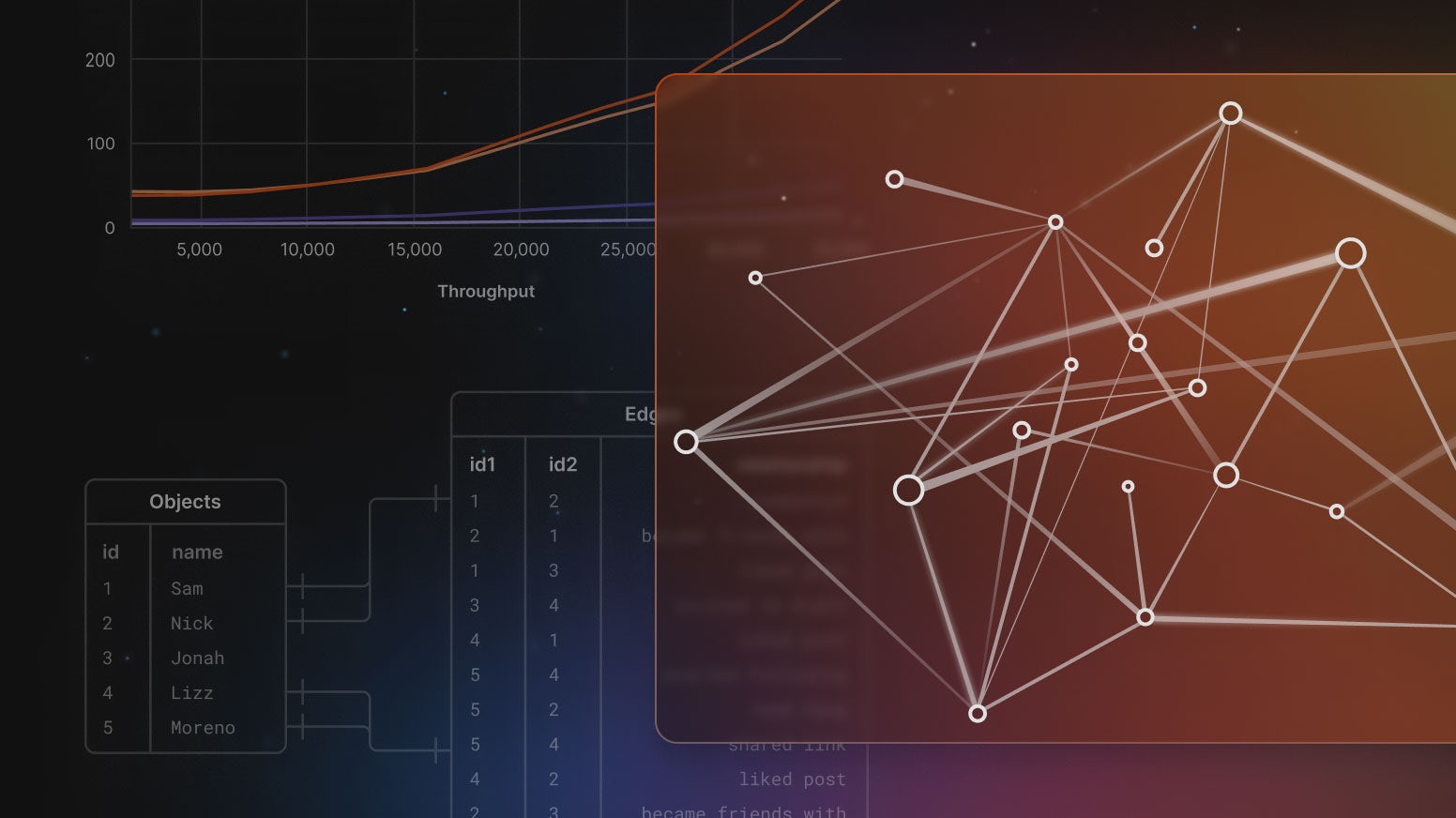

The schema for TAOBench is straightforward: it consists of two tables, objects and edges. These loosely map to the nodes of a social graph, i.e. its entities (think “users”, “posts”, “pictures”, etc.), and to the various types of relationships between those entities (think “likes”, “shares”, “friendships”, etc.).

In simple relational database terms, the edges table can be viewed as a “many-to-many” relationship table that links rows in objects to other rows in objects.
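To make the many-to-many shape concrete, here is a minimal Python sketch using the standard library’s sqlite3 module. The column names (id, value, id1, id2, type) and the sample rows are illustrative assumptions, not TAOBench’s actual schema; the point is only that a question like “who liked this post?” becomes a join from edges back to objects.

```python
import sqlite3

# Hypothetical, simplified schema loosely modeled on the objects/edges concept.
# Column names are illustrative assumptions, not TAOBench's actual DDL.
SCHEMA = """
CREATE TABLE objects (
    id    INTEGER PRIMARY KEY,
    value TEXT NOT NULL
);
CREATE TABLE edges (
    id1   INTEGER NOT NULL REFERENCES objects(id),  -- source object
    id2   INTEGER NOT NULL REFERENCES objects(id),  -- target object
    type  TEXT    NOT NULL,                         -- e.g. 'likes', 'friend'
    value TEXT,
    PRIMARY KEY (id1, id2, type)
);
"""


def build_demo_graph() -> sqlite3.Connection:
    """Create an in-memory database with a tiny hypothetical social graph."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO objects (id, value) VALUES (?, ?)",
        [(1, "user:alice"), (2, "user:bob"), (3, "post:hello")],
    )
    conn.executemany(
        "INSERT INTO edges (id1, id2, type) VALUES (?, ?, ?)",
        [(1, 3, "likes"), (2, 3, "likes"), (1, 2, "friend")],
    )
    return conn


def objects_linked_to(conn: sqlite3.Connection, target_id: int, edge_type: str) -> list[str]:
    """Which objects have an edge of edge_type pointing at target_id?"""
    rows = conn.execute(
        """
        SELECT o.value
        FROM edges e
        JOIN objects o ON o.id = e.id1
        WHERE e.id2 = ? AND e.type = ?
        ORDER BY o.id
        """,
        (target_id, edge_type),
    ).fetchall()
    return [value for (value,) in rows]


if __name__ == "__main__":
    conn = build_demo_graph()
    print(objects_linked_to(conn, 3, "likes"))  # ['user:alice', 'user:bob']
```

Because both ends of an edge reference the same objects table, a single popular object (here, the post with id 3) can accumulate a very large number of edges pointing at it.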

Data composition matters

The statistical distribution of data in both tables depends on the chosen workload type (A or O), so data should be reloaded when switching between them. Focusing the workload on these two simplified concepts lets the benchmark simulate typical “hot row” scenarios, which can be particularly challenging for relational databases to handle. Think of what happens when something goes viral: a thundering herd of users arrives to interact with a specific piece of content. At the database level, beyond a sudden surge in connections, this can translate into various types of lock contention on the rows backing that content, with ripple effects that ultimately slow down content access for the users on the platform.
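A rough way to see why skewed access creates hot rows: the sketch below draws object IDs from a Zipf-like distribution and measures what share of all requests lands on the single most popular row. The Zipf exponent, key count, and request count are hypothetical parameters chosen for illustration; TAOBench’s actual distributions are based on its analysis of Meta’s workload, not on this formula.

```python
import random
from collections import Counter


def zipf_weights(n_keys: int, s: float) -> list[float]:
    """Unnormalized Zipf weights: the key with popularity rank k gets 1 / k**s.
    s = 0 gives a uniform distribution; larger s concentrates traffic on fewer keys."""
    return [1.0 / (k ** s) for k in range(1, n_keys + 1)]


def simulate_requests(n_keys: int, n_requests: int, s: float, seed: int = 42) -> Counter:
    """Draw n_requests object IDs with Zipf-like skew and count hits per ID."""
    rng = random.Random(seed)
    keys = list(range(1, n_keys + 1))
    picks = rng.choices(keys, weights=zipf_weights(n_keys, s), k=n_requests)
    return Counter(picks)


def hottest_share(counts: Counter, n_requests: int) -> float:
    """Fraction of all requests that landed on the single most-requested row."""
    _, top_hits = counts.most_common(1)[0]
    return top_hits / n_requests


if __name__ == "__main__":
    n_keys, n_requests = 1_000, 100_000
    for s in (0.0, 1.0):
        counts = simulate_requests(n_keys, n_requests, s)
        print(f"s={s}: hottest row receives {hottest_share(counts, n_requests):.2%} of requests")
```

With 1,000 keys, the uniform case spreads requests almost evenly, so the hottest row sees only a fraction of a percent of the traffic, while with s = 1 around 13% of all requests land on a single row. Under write-heavy traffic, that is the row whose locks everyone ends up queuing behind.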

The TAOBench test timeline

The TAOBench workload executes in three distinct phases:

- During the “load” phase, it performs bulk inserts, populating the objects and edges tables according to the chosen workload scenario.

- The “bulk reads” phase (which is initiated at the start of any real benchmark run) performs very aggressive range scans across the entire dataset. These scans serve as a general warmup for whatever caching mechanisms may be in place, and also aggregate the statistical information that feeds into the experiments themselves. This phase is not measured, but can be extremely punishing to the underlying infrastructure.

- The “experiments” phase accepts a set of predefined concurrency levels and runtime operation targets to help scale the chosen workload to various sizes of infrastructure.
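The three phases can be sketched as a small driver. Everything here is hypothetical: an in-memory dictionary stands in for the database, the function names are invented for illustration, and the experiments phase only issues edge reads. The structure follows the description above: load the data, run an unmeasured scan whose statistics feed the experiments, then step through a list of concurrency levels with a fixed operation target at each level.

```python
import random
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class InMemoryGraph:
    """Hypothetical stand-in for the database: objects plus adjacency sets of edges."""

    def __init__(self) -> None:
        self.objects: dict[int, str] = {}
        self.edges: dict[int, set[int]] = {}
        self._lock = threading.Lock()

    def read_edges(self, obj_id: int) -> set[int]:
        # Copy one object's edges under a lock, loosely like a consistent read.
        with self._lock:
            return set(self.edges.get(obj_id, ()))


def load_phase(db: InMemoryGraph, n_objects: int, n_edges: int, rng: random.Random) -> None:
    """Phase 1 ("load"): bulk-insert objects, then random edges between them."""
    for i in range(n_objects):
        db.objects[i] = f"obj-{i}"
        db.edges[i] = set()
    for _ in range(n_edges):
        src, dst = rng.randrange(n_objects), rng.randrange(n_objects)
        db.edges[src].add(dst)  # duplicate (src, dst) pairs collapse in the set


def bulk_reads_phase(db: InMemoryGraph) -> dict[int, int]:
    """Phase 2 ("bulk reads", unmeasured): scan every object and collect
    per-object edge counts to feed into the experiments."""
    return {obj_id: len(db.read_edges(obj_id)) for obj_id in db.objects}


def experiments_phase(
    db: InMemoryGraph,
    stats: dict[int, int],
    concurrency_levels: list[int],
    ops_per_level: int,
    seed: int = 0,
) -> dict[int, float]:
    """Phase 3 ("experiments"): at each concurrency level, issue a fixed number of
    edge reads from that many worker threads and record throughput in ops/s."""
    ids = list(stats)
    weights = [count + 1 for count in stats.values()]  # favor well-connected objects
    results: dict[int, float] = {}
    for level in concurrency_levels:
        rng = random.Random(seed + level)
        targets = rng.choices(ids, weights=weights, k=ops_per_level)
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=level) as pool:
            # Consume results so any exception raised in a worker is re-raised here.
            list(pool.map(db.read_edges, targets))
        results[level] = ops_per_level / (time.perf_counter() - start)
    return results


if __name__ == "__main__":
    db = InMemoryGraph()
    load_phase(db, n_objects=1_000, n_edges=5_000, rng=random.Random(1))
    stats = bulk_reads_phase(db)
    for level, ops in experiments_phase(db, stats, [1, 4, 16], ops_per_level=2_000).items():
        print(f"concurrency={level:>2}: {ops:,.0f} ops/s")
```

The dependency between phases is the main point of the sketch: the experiments reuse the statistics gathered by the bulk reads phase (here, to bias reads toward well-connected objects). Because of CPython’s global interpreter lock and the single shared lock, the reported throughput will not scale with concurrency; a real harness drives many clients against an actual database.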

PlanetScale’s results and initial conclusions

The results published in Cheng’s white paper were independently measured by the TAOBench team against PlanetScale infrastructure. Since the benchmark code is publicly available, PlanetScale has also been able to verify them internally. As with our earlier “One million queries per second with MySQL” blog post, the numbers themselves are by no means approaching the limits of what our infrastructure can accomplish. Rather, they represent what is achieved when an explicit resource limit is imposed on the cluster.

The limit Cheng’s team set for their tests was 48 CPU cores. Since the test was performed against PlanetScale’s multi-tenant serverless database offering, where certain infrastructure resources are shared across tenants, we capped the “query path” of the cluster at 44 of the 48 requested cores. The remaining 4 cores were reserved for multi-tenant parts of the infrastructure, such as edge load balancers. Stay tuned for a more in-depth blog post on how the resources were allocated to the various Vitess components we provisioned, along with an exploration of the OS-level metrics.

Our key takeaway from the initial results is the sustained stability of PlanetScale clusters under even the most extreme resource pressure. TAOBench’s “experiments” phase gradually increases concurrency to bring the target database to its knees. As is to be expected in an artificially constrained environment, once all 44 cores are running at 100%, throughput (measured in requests per second) is expected to hit a ceiling while average response times keep increasing.
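The shape of that curve follows from Little’s Law. The sketch below is an idealized closed-loop model, not a description of PlanetScale internals: it assumes every client sends its next request as soon as the previous one returns, and that each request consumes a fixed, hypothetical 2 ms of CPU on one of the 44 cores. Throughput is then capped at 44 / 0.002 s = 22,000 requests per second, and beyond that point Little’s Law (concurrency = throughput × response time) means average latency can only grow linearly with concurrency.

```python
from dataclasses import dataclass


@dataclass
class Point:
    concurrency: int
    throughput_rps: float
    avg_latency_s: float


def saturation_curve(cores: int, service_time_s: float, levels: list[int]) -> list[Point]:
    """Idealized closed-loop model with zero think time.

    Each in-flight request occupies one core for service_time_s, so the system
    can complete at most cores / service_time_s requests per second. Below that
    cap, throughput grows with concurrency; above it, Little's Law
    (concurrency = throughput * latency) forces average latency upward instead.
    """
    capacity_rps = cores / service_time_s
    curve = []
    for n in levels:
        throughput = min(n / service_time_s, capacity_rps)
        latency = n / throughput  # Little's Law solved for response time
        curve.append(Point(n, throughput, latency))
    return curve


if __name__ == "__main__":
    # Hypothetical numbers: 44 cores, 2 ms of CPU per request.
    for p in saturation_curve(cores=44, service_time_s=0.002, levels=[22, 44, 88, 176]):
        print(f"N={p.concurrency:>3}  {p.throughput_rps:>8,.0f} req/s  {p.avg_latency_s * 1000:.1f} ms")
```

In this model, doubling concurrency from 88 to 176 clients leaves throughput flat at roughly 22,000 requests per second while doubling average latency from 4 ms to 8 ms. Real systems deviate from this ideal once contention, context switching, and queueing overheads start eating into useful work.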

Most systems have some headroom, even while running at what looks like 100% CPU. With ever-increasing workload pressure, though, every piece of software eventually starts to fail, through thrashing, congestive collapse, or other effects.

Distributed database systems are not magically protected from these failure scenarios. If anything, added infrastructural complexity and competition among different types of resources generally create many more interesting ways for things to break down. Observing how software behaves in these failure scenarios reveals a lot about what to expect in situations that are impossible to plan for. Finding the balance between resource efficiency and graceful failure handling requires equal parts software maturity and ongoing infrastructure engineering excellence.

That is why enterprise organizations are increasingly choosing to have their database workloads managed by PlanetScale. Don’t hesitate to reach out if you’d like to talk about how we can apply these principles to your use case.